
VSA-100: The heart of the monster

On the Voodoo 5 6000 we can find 4 VSA-100 chips which have the following characteristics:


The VSA-100 (Voodoo Scalable Architecture) can be seen as a major update to the Voodoo 3, intended to put 3dfx back in the race against ATI and nVidia. For example, it added 32-bit rendering and support for 2048x2048 pixel textures. At a frequency of 166MHz, the theoretical memory bandwidth of each VSA-100 is (128 bits × 166 × 10^6 Hz) / 8 bits per byte ≈ 2.66GB/sec (usually quoted as 2.67GB/sec, which corresponds to a clock of 166.67MHz). A few rare Voodoo 5 cards also have the VSA-101 (Daytona) GPU, which is a VSA-100 made on a 0.18 micron process that generates less heat.
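The bandwidth arithmetic can be checked with a few lines of Python. This is only a sketch: the function name is made up for illustration, and it assumes one 128-bit transfer per clock cycle and 1 GB = 10^9 bytes.

```python
def memory_bandwidth_gb_per_s(bus_width_bits, clock_hz):
    """Peak bandwidth in GB/s, assuming one full-width transfer per clock."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * clock_hz / 1e9


# 128-bit bus at exactly 166 MHz
print(round(memory_bandwidth_gb_per_s(128, 166e6), 3))     # 2.656

# Same bus at 166.67 MHz, which gives the often-quoted 2.67 GB/sec
print(round(memory_bandwidth_gb_per_s(128, 166.67e6), 3))  # 2.667
```

The small gap between the two results is purely a matter of how the clock frequency is rounded.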

- The SLI

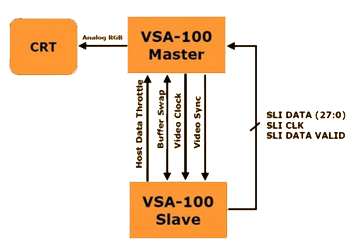

The SLI used in the VSA-100 has almost nothing in common with the one used on the Voodoo 2 cards. The old system was limited to 2 cards, which rendered alternate lines. The VSA-100 was designed to work in configurations of up to 32 chips. Let's look at the example of the Voodoo 5 5500:

In this configuration two VSA-100 chips are connected: one is the master and the other is the slave. First of all, we need to know that each chip has its own memory: on a Voodoo 5 5500, each of the 2 VSA-100 chips owns 32MB. Here is how it works:

- The master analyses the picture to be rendered and cuts it into zones of 1 to 128 lines, trying to give each chip the same workload.

- The master sends the data to the slave chip.

- The slave chip renders its share and sends the result back to the master.

- The data from both VSA-100 chips is combined in the frame buffer of the master VSA-100 and displayed.
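The splitting step can be illustrated with a small sketch. How the master really picks the zone size and balances the load is not documented here, so the round-robin assignment below and the names `assign_bands` and `lines_per_chip` are assumptions made purely for illustration.

```python
def assign_bands(frame_height, band_height, num_chips=2):
    """Cut a frame into horizontal bands and hand them out round-robin.

    band_height must be between 1 and 128 lines, as in the article.
    Returns one list of line ranges per chip (chip 0 plays the master).
    """
    if not 1 <= band_height <= 128:
        raise ValueError("band height must be between 1 and 128 lines")
    bands = [[] for _ in range(num_chips)]
    for index, top in enumerate(range(0, frame_height, band_height)):
        bottom = min(top + band_height, frame_height)
        bands[index % num_chips].append(range(top, bottom))
    return bands


def lines_per_chip(bands):
    """Count how many scanlines each chip has to render."""
    return [sum(len(band) for band in chip) for chip in bands]


# 480-line frame: small bands balance perfectly, large bands do not
print(lines_per_chip(assign_bands(480, 16)))   # [240, 240]
print(lines_per_chip(assign_bands(480, 128)))  # [256, 224]
```

Smaller bands spread the lines more evenly between the chips, which hints at why the zone height is adjustable rather than fixed.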

Unfortunately, we didn't find any documentation on the bandwidth available between the 2 VSA-100 chips, but for architectural reasons it is probably a 128-bit, 166MHz link.

This architecture has some drawbacks. A texture may have to be stored in the memory of both VSA-100 chips at the same time if both need it for rendering. Also, we can't say that the total memory bandwidth is 2 × 2.67GB/sec, as it depends on how the memory is accessed. We will see later that this way of communicating between the VSA-100 chips is *ONLY* applicable in a 2-chip configuration. With more chips, an external PCI-PCI bridge is needed, which is the case on the Voodoo 5 6000.

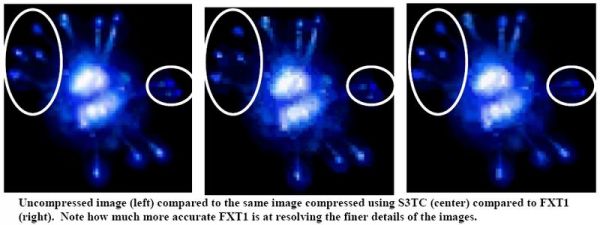

- FXT1

Another technology introduced with the VSA-100 was FXT1 texture compression. This compression method had the advantage of being open source, so it could be used by everyone. The main difference between FXT1 and the currently used S3TC is that it can use up to 4 compression algorithms for each image, where S3TC (= DXTC) uses only one. FXT1 texture decompression is implemented in hardware in the VSA-100. The problem is that this compression was never integrated into DirectX by Microsoft, so it wasn't widely used. However, some games, like the Serious Sam series, use it.

The advantages of texture compression are easy to understand: because each texture uses less memory, you can increase the maximum texture size used in a scene. Seen from another angle, you can use bigger textures while taking less memory, or apply multiple textures to an object (bump mapping).
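To put numbers on the memory saving, here is a minimal sketch. It assumes FXT1 stores 4 bits per texel (8x4 texel blocks packed into 128 bits) and ignores mipmaps; the helper name `texture_bytes` is hypothetical.

```python
def texture_bytes(width, height, bits_per_texel):
    """Memory needed for one texture level, in bytes."""
    return width * height * bits_per_texel // 8


MIB = 1024 * 1024

# Largest texture supported by the VSA-100, stored as 32-bit RGBA
uncompressed = texture_bytes(2048, 2048, 32)

# Same texture at an assumed 4 bits per texel for FXT1
compressed = texture_bytes(2048, 2048, 4)

print(uncompressed // MIB)         # 16
print(compressed // MIB)           # 2
print(uncompressed // compressed)  # 8
```

Under these assumptions, a single uncompressed 2048x2048 texture would eat half of the 32MB attached to one VSA-100, while the compressed version fits in 2MB.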

- T-Buffer

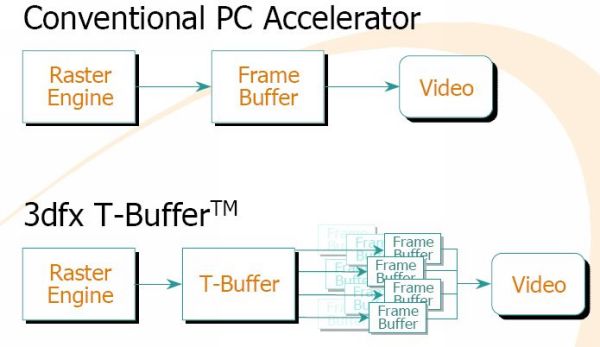

As early as 1984, people from the computer division at Lucasfilm published a paper called "Distributed Ray Tracing", which addressed the main rendering problem (aliasing) in computer-rendered pictures. New ray tracing techniques were developed afterwards, which led to a considerable improvement in rendering quality. The problem is that they couldn't be rendered in real time. A solution to this problem was found in 1990 by people at SGI, who published a paper called "The Accumulation Buffer: Hardware Support for High-Quality Rendering". The accumulation buffer is a memory that can temporarily hold multiple renderings of a scene and apply effects to them. The T-Buffer by 3dfx is directly derived from it.

The T-Buffer was undoubtedly the key element used by the marketing people when the VSA-100 chip came out, and it is indeed the key feature of this chip. Here we can see where this buffer is located compared to a classical graphics card:

The T-Buffer can store several images of the same scene, each very slightly different from the others, in order to obtain interesting visual effects such as:

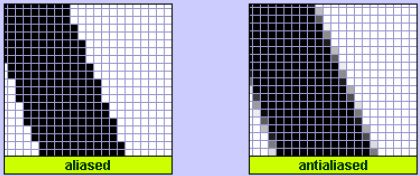

- FSAA (a form of spatial anti-aliasing): we will talk about this in detail later, because it is by far the most interesting use of the T-Buffer. The purpose of full scene anti-aliasing is to avoid the staircase effect and pixel popping in images. We can clearly see an example of the staircase effect in the following picture.

- Motion blur (temporal anti-aliasing): this technique creates a "blur" effect when an object is moving, a trail if you like. The goal is to render movement more realistically and, at the same time, to improve the impression of fluidity.

- Depth of field blur (focal anti-aliasing): this effect simulates the focusing effect often used in movies. It is really a matter of bringing one object into focus, as with a camera. We can see it very clearly here:

- Soft shadows: this function generates realistic shadows without a sharp cut between the shadowed and lit zones. It creates a half-light zone, which makes the shadow look more realistic.

- Soft reflections: let's take an example to understand this. Consider a pencil standing perpendicular on a brushed metal plate. In reality, the reflection of the pencil on the metal plate is sharp close to the zone of contact and becomes less and less sharp as one moves away from the plate. That is exactly what this function tries to reproduce.
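All of the effects listed above rely on the same basic operation: render the scene several times with a small change each time, then blend the results. The sketch below shows that accumulation step for motion blur on a one-line grayscale "image". It is not 3dfx's implementation, and `accumulate` and `render_dot` are hypothetical names.

```python
def accumulate(renders):
    """Average several same-sized grayscale renders pixel by pixel."""
    count = len(renders)
    height = len(renders[0])
    width = len(renders[0][0])
    return [
        [sum(r[y][x] for r in renders) / count for x in range(width)]
        for y in range(height)
    ]


def render_dot(position, width=6):
    """Toy renderer: one scanline with a bright dot on a black background."""
    return [[1.0 if x == position else 0.0 for x in range(width)]]


# Four sub-frames of a dot moving from x=1 to x=4 within one displayed frame
sub_frames = [render_dot(position) for position in (1, 2, 3, 4)]
blurred = accumulate(sub_frames)

print(blurred[0])  # [0.0, 0.25, 0.25, 0.25, 0.25, 0.0]
```

The dot is smeared into a dim trail along its path. Changing what varies between the renders (sample position, camera focus, light position) would give the other effects in the same way.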

- FSAA

As we said before, FSAA (Full Scene Anti-Aliasing) is the most used function of the T-Buffer. It increases the overall rendering quality of a scene by eliminating most of the visible artefacts. It's a special form of spatial anti-aliasing.

The most common visible problem is the staircase effect, which we can clearly see in this picture:

The other common visual problem is called "pixel popping": pixels suddenly appear or disappear when an object is moving. It happens when the polygons to be sampled are smaller than a pixel, or when they fall between two pixels and do not touch a sampling point (the little dots on the diagram). The result is lines or points that appear and disappear when things move in a scene.
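Pixel popping is easy to reproduce in one dimension. The sketch below moves a sliver a quarter of a pixel wide across a scanline and checks which sample points it touches. The function name and the sample offsets are assumptions chosen for the demonstration.

```python
def coverage(left, width, pixel, offsets):
    """Fraction of a pixel's sample points that fall inside [left, left + width)."""
    right = left + width
    hits = sum(1 for offset in offsets if left <= pixel + offset < right)
    return hits / len(offsets)


CENTER_ONLY = (0.5,)                           # one sample at the pixel centre
FOUR_SAMPLES = (0.125, 0.375, 0.625, 0.875)    # four samples spread over the pixel

# A 0.25-pixel-wide sliver sliding across pixel 1 over three frames
positions = (1.0, 1.375, 1.75)

single = [coverage(left, 0.25, 1, CENTER_ONLY) for left in positions]
multi = [coverage(left, 0.25, 1, FOUR_SAMPLES) for left in positions]

print(single)  # [0.0, 1.0, 0.0]
print(multi)   # [0.25, 0.25, 0.25]
```

With a single sample the sliver is invisible, then fully lit, then invisible again, which is exactly the popping described above. With several samples per pixel it keeps a steady, dimmer intensity.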

To solve these problems (known as aliasing), we use anti-aliasing. In theory AA is not complicated: analyze the colors of the pixels surrounding the problematic one and average them before displaying it. In practice, the averaging is done with super-sampling, or more precisely with one of two subsets of it, over-sampling and multi-sampling.

Over-sampling is the technique used by GeForce and Radeon graphics cards. It consists of rendering a scene at a resolution higher than the desired one, then reducing it with a filtering method (for example bilinear filtering) before displaying it. This rendering method uses OGSS (ordered grid super-sampling).
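As a rough illustration of over-sampling, the sketch below renders a diagonal edge at twice the target resolution and averages each 2x2 block into one output pixel. A plain box filter stands in for whatever filter a real card applies, and both function names are hypothetical.

```python
def render_edge(size):
    """Toy renderer: white (1.0) where y > x, black (0.0) elsewhere."""
    return [[1.0 if y > x else 0.0 for x in range(size)] for y in range(size)]


def downsample_2x(image):
    """Average each 2x2 block of the high-resolution image into one pixel."""
    result = []
    for y in range(0, len(image), 2):
        row = []
        for x in range(0, len(image[0]), 2):
            total = (image[y][x] + image[y][x + 1]
                     + image[y + 1][x] + image[y + 1][x + 1])
            row.append(total / 4)
        result.append(row)
    return result


# Target resolution is 2x2, so the scene is rendered at 4x4 first
high_res = render_edge(4)
final = downsample_2x(high_res)

print(final)  # [[0.25, 0.0], [1.0, 0.25]]
```

The pixels crossed by the edge end up with intermediate grey values instead of a hard black or white step, which is what softens the staircase.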

Multi-sampling is the technique used by the VSA-100 chip. It consists of rendering an image 2 or 4 times into the T-Buffer, shifting it a little each time, using RGSS (rotated grid super-sampling).

In the picture above we can see that RGSS sampling is very similar to OGSS sampling, except that the grid is rotated by an angle of about 20-30°. This angle gives RGSS better anti-aliasing quality on vertical and horizontal lines, and it's easy to understand why: the sampling points lie on either side of the vertical and horizontal axes. Defects on vertical and horizontal lines are precisely those to which the human eye is most sensitive, so visual quality is theoretically better with RGSS. Here we can see an example of RGSS rendering done by the VSA-100.
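The benefit of the rotation can be shown by sweeping a vertical edge across a single pixel and counting how many distinct coverage values each pattern can produce. The sample positions below are a commonly used 4x ordered grid and 4x rotated grid, assumed here for illustration rather than taken from 3dfx documentation.

```python
import math

# (x, y) sample positions inside one pixel, in the range 0..1
OGSS_4X = [(0.25, 0.25), (0.75, 0.25), (0.25, 0.75), (0.75, 0.75)]
RGSS_4X = [(0.125, 0.625), (0.375, 0.125), (0.625, 0.875), (0.875, 0.375)]


def vertical_edge_levels(samples, steps=16):
    """Distinct coverage values seen as a vertical edge sweeps across the pixel."""
    levels = set()
    for i in range(steps + 1):
        edge_x = i / steps
        covered = sum(1 for sample_x, _ in samples if sample_x < edge_x)
        levels.add(covered / len(samples))
    return sorted(levels)


print(vertical_edge_levels(OGSS_4X))  # [0.0, 0.5, 1.0]
print(vertical_edge_levels(RGSS_4X))  # [0.0, 0.25, 0.5, 0.75, 1.0]

# Rotation of this particular rotated grid, from two neighbouring samples
angle = math.degrees(math.atan2(0.375 - 0.125, 0.875 - 0.375))
print(round(angle, 1))  # 26.6
```

With the ordered grid, two samples share each x position, so a near-vertical edge only gets one intermediate shade. The rotated grid gives every sample its own x and y position, which yields three intermediate shades for the same number of samples.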

Unlike the other T-Buffer effects, FSAA can be used with every game, because it does not depend on a software implementation (OK, apart from the drivers). For further information on the different AA methods, have a look at this document published by Beyond3D, which is extremely interesting to read if you have the time.

Despite its many innovations, the VSA-100 lacked two important features: it supported neither T&L nor pixel shaders. At the time that could still be justified, because few games used these technologies, but looking back today this choice seems rather problematic.